jinfagang / yolov7

- Friday, July 1, 2022 at 00:34:20

🔥 🔥 🔥 🔥 YOLO with Transformers and Instance Segmentation, with TensorRT acceleration! 🔥 🔥 🔥


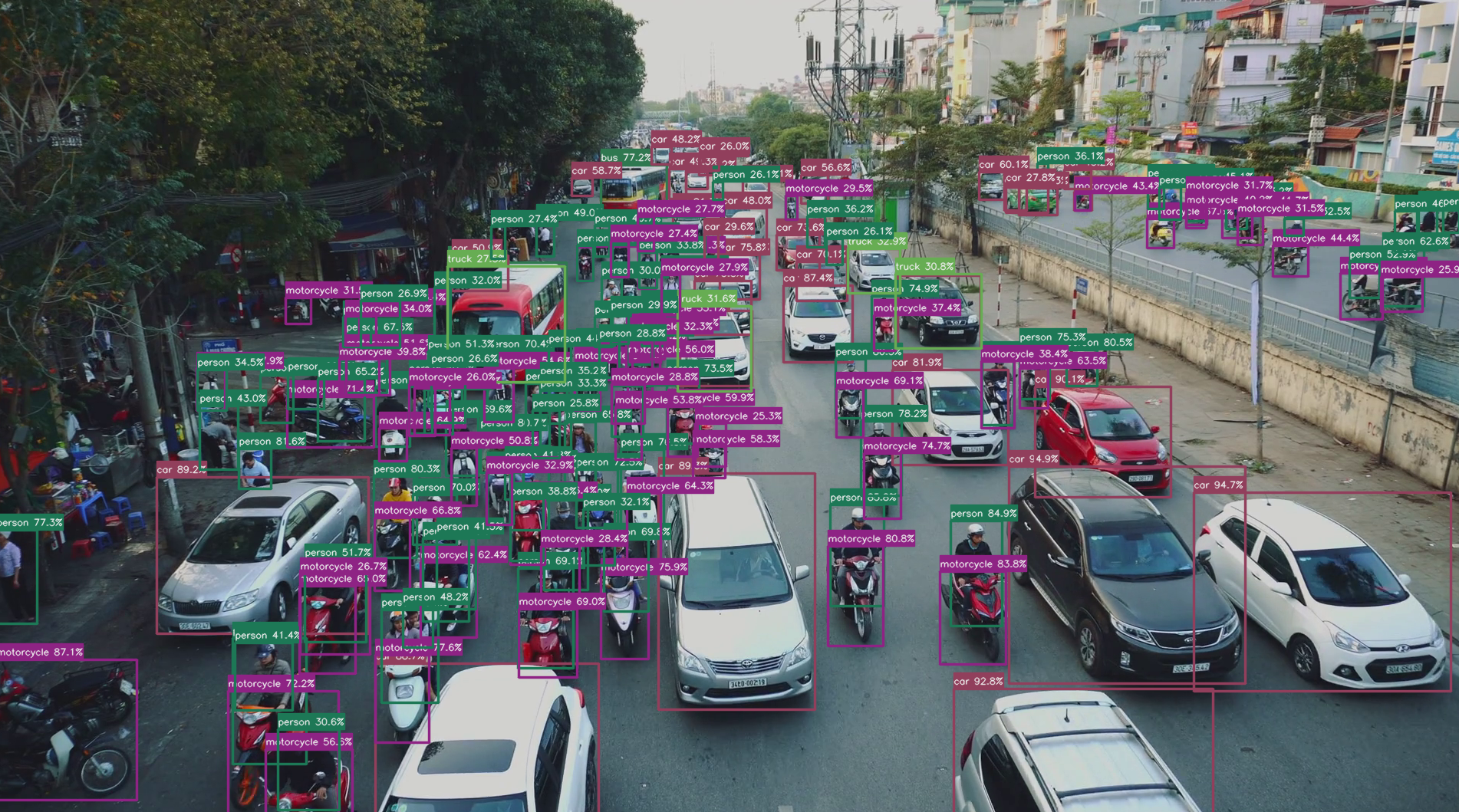

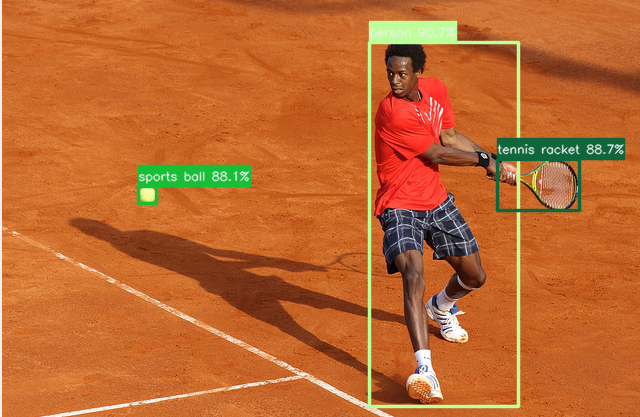

In short: YOLOv7 adds instance segmentation to the YOLO architecture and also includes many transformer backbones and architectures. If you look carefully, you'll find that our ultimate vision is to make YOLO great again through the power of transformers and multi-task training. With a ConvNeXt-tiny backbone, YOLOv7 achieves 43 mAP and exceeds Mask R-CNN on AP-s by 10 points, at a speed similar to YOLOX-s. More models are listed below; they are more accurate and even lighter!

detectron2. Note, however, that YOLOv7 is not meant to be a successor of the YOLO family; 7 is just a magic, lucky number. Instead, YOLOv7 extends YOLO to many other vision tasks, such as instance segmentation and one-stage keypoint detection.

The support matrix of YOLOv7 is:

⚠️ Important note: YOLOv7 on GitHub is not the latest version. Many features are closed-source, but you can get them from https://manaai.cn

Features that are ready but not open-sourced yet:

If you want the full version of YOLOv7, either become a contributor or get it from https://manaai.cn

SparseInst ONNX export!

If you have spare time or a GPU card, please help make YOLOv7 stronger! Here is how to contribute:

- Claim a task: I have some ideas but not enough time to implement them. If you want to implement one, claim the task and I will give you full advice on how to do it; you can learn a lot from it.
- Test mAP: when you have finished implementing a new idea, create a thread to report the experimental mAP. If it works, it will be merged into our main master branch.
- Pull request: YOLOv7 is open and always tracks SOTA and lightweight models. If a model is useful, we will merge it, deploy it, and distribute it to all users who want to try it.

Here are some tasks that need to be claimed:

Join our in-house contributor plan and you can get our newest code in return for your contributions!

[Images: YOLOv7 Instance | Face & Detection]

Special requirements (other versions may also work, but these are the tested ones, with the best performance and the best ONNX export support):

If you use an older version of torch, ONNX export might not work as expected.

Some highlights of YOLOv7 are:

We strongly recommend sending a PR if you do any further development on this project; the only reason for open-sourcing it is to use the power of the community to make it stronger and take it further. Anyone is very welcome to contribute any feature!

| model | backbone | input | aug | AP (val) | AP | FPS | weights |
|---|---|---|---|---|---|---|---|
| SparseInst | R-50 | 640 | ✘ | 32.8 | - | 44.3 | model |
| SparseInst | R-50-vd | 640 | ✘ | 34.1 | - | 42.6 | model |
| SparseInst (G-IAM) | R-50 | 608 | ✘ | 33.4 | - | 44.6 | model |
| SparseInst (G-IAM) | R-50 | 608 | ✓ | 34.2 | 34.7 | 44.6 | model |
| SparseInst (G-IAM) | R-50-DCN | 608 | ✓ | 36.4 | 36.8 | 41.6 | model |
| SparseInst (G-IAM) | R-50-vd | 608 | ✓ | 35.6 | 36.1 | 42.8 | model |
| SparseInst (G-IAM) | R-50-vd-DCN | 608 | ✓ | 37.4 | 37.9 | 40.0 | model |
| SparseInst (G-IAM) | R-50-vd-DCN | 640 | ✓ | 37.7 | 38.1 | 39.3 | model |

SparseInst Int8 ONNX: google drive

| model | backbone | input | aug | AP | AP50 | APs | FPS | weights |
|---|---|---|---|---|---|---|---|---|
| YoloFormer-Convnext-tiny | Convnext-tiny | 800 | ✓ | 43 | 63.7 | 26.5 | 39.3 | model |
| YOLOX-s | - | 800 | ✓ | 40.5 | - | - | 39.3 | model |

Note: we report AP-s here to show how the related models perform on small objects; the transformer-backbone model has notably higher small-object AP! Some of the models above might not be open-sourced, but we provide their weights.
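AP-s is the COCO-style AP restricted to small objects. A minimal Python sketch of how objects are split by size, assuming the usual COCO thresholds of 32² and 96² pixels applied to each ground-truth annotation's area (the mask area for instance segmentation). The helper name `coco_size_bucket` is hypothetical, and pycocotools handles values exactly on a boundary slightly differently:

```python
# COCO-style size buckets used for AP-s / AP-m / AP-l (thresholds in pixels^2).
SMALL_MAX = 32 ** 2   # 1024
MEDIUM_MAX = 96 ** 2  # 9216


def coco_size_bucket(area: float) -> str:
    """Classify an object by its annotated area (box or mask area, in pixels)."""
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"
```

For example, a 20×20 object counts toward AP-s, while a 50×50 one counts toward AP-m.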

Running a quick demo looks like this:

python3 demo.py --config-file configs/wearmask/darknet53.yaml --input ./datasets/wearmask/images/val2017 --opts MODEL.WEIGHTS output/model_0009999.pth

Run SparseInst:

python demo.py --config-file configs/coco/sparseinst/sparse_inst_r50vd_giam_aug.yaml --video-input ~/Movies/Videos/86277963_nb2-1-80.flv -c 0.4 --opts MODEL.WEIGHTS weights/sparse_inst_r50vd_giam_aug_8bc5b3.pth
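Both demo commands pass config overrides after `--opts` as flat `KEY VALUE` pairs (e.g. `MODEL.WEIGHTS <path>`). In detectron2's own demo script these are handed to yacs' `CfgNode.merge_from_list`; assuming YOLOv7's `demo.py` follows the same pattern, the sketch below shows the idea on a plain nested dict. `apply_opts` is a hypothetical, simplified helper, not YOLOv7 or yacs code:

```python
import ast
from typing import Any, Dict, List


def apply_opts(cfg: Dict[str, Any], opts: List[str]) -> Dict[str, Any]:
    """Apply flat KEY VALUE overrides (as given after --opts) to a nested dict in place.

    Hypothetical, simplified illustration of the merge_from_list idea; unlike yacs,
    it does not check that the new value's type matches the old one.
    """
    if len(opts) % 2 != 0:
        raise ValueError("--opts expects an even number of items: KEY VALUE pairs")
    for key, raw in zip(opts[0::2], opts[1::2]):
        *parents, leaf = key.split(".")
        node = cfg
        # Walk down the dotted path, e.g. "MODEL.WEIGHTS" -> cfg["MODEL"]["WEIGHTS"].
        for name in parents:
            if name not in node:
                raise KeyError(f"Non-existent config key: {key}")
            node = node[name]
        if leaf not in node:
            raise KeyError(f"Non-existent config key: {key}")
        try:
            # Numbers, booleans, lists and quoted strings are parsed as Python literals...
            value = ast.literal_eval(raw)
        except (ValueError, SyntaxError, TypeError):
            # ...anything else (e.g. a weights path) is kept as a plain string.
            value = raw
        node[leaf] = value
    return cfg
```

Rejecting unknown keys (instead of silently creating them) mirrors the usual yacs behavior and catches typos such as `MODEL.WEIGHT`.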

As an update based on detectron2's newly introduced LazyConfig system, a LazyConfig model can be run with:

python3 demo_lazyconfig.py --config-file configs/new_baselines/panoptic_fpn_regnetx_0.4g.py --opts train.init_checkpoint=output/model_0004999.pth

Training is quite simple and works the same way as in detectron2:

python train_net.py --config-file configs/coco/darknet53.yaml --num-gpus 8

If you want to train YOLOX, use the config file configs/coco/yolox_s.yaml. All supported architectures are:

There are some rules you must follow if you want to train on your own dataset:

tools/compute_anchors.py; this applies to any other anchor-based detection method as well (EfficientDet etc.).

Make sure you have read the rules before asking me any questions.
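The contents of tools/compute_anchors.py are not shown here. As a hedged illustration of what recomputing anchors for a custom dataset usually involves, the sketch below clusters ground-truth box sizes with YOLOv2-style k-means, using 1 - IoU as the distance and comparing boxes as if they shared a corner, so only width and height matter. The function names, the mean-based centroid update, and the fixed seed are our assumptions, not necessarily what the script does:

```python
import random
from typing import List, Sequence, Tuple

Box = Tuple[float, float]  # (width, height) of a ground-truth box, in pixels


def wh_iou(a: Box, b: Box) -> float:
    """IoU of two boxes assumed to share the same corner (only sizes matter)."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def kmeans_anchors(boxes: Sequence[Box], k: int, iters: int = 100, seed: int = 0) -> List[Box]:
    """Cluster box sizes with distance 1 - IoU; returns k anchors sorted by area."""
    if len(boxes) < k:
        raise ValueError("need at least k boxes")
    rng = random.Random(seed)
    centroids: List[Box] = rng.sample(list(boxes), k)
    for _ in range(iters):
        # Assign each box to the centroid with the highest IoU (= smallest 1 - IoU).
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda i: wh_iou(box, centroids[i]))
            clusters[best].append(box)
        # Move each centroid to the mean width/height of its members; keep it if empty.
        new_centroids = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: assignments no longer change
        centroids = new_centroids
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


def mean_best_iou(boxes: Sequence[Box], anchors: Sequence[Box]) -> float:
    """Average IoU between each box and its best-matching anchor (higher is better)."""
    return sum(max(wh_iou(b, a) for a in anchors) for b in boxes) / len(boxes)
```

Using 1 - IoU instead of Euclidean distance keeps large boxes from dominating the clustering, and `mean_best_iou` gives a quick check of how well a set of anchors fits the dataset.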

DETR: python export_onnx.py --config-file detr/config/file

This work has been done; an inference script is included in tools.

AnchorDETR: AnchorDETR also supports training and exporting to ONNX.

SparseInst:

SparseInst already supports exporting to ONNX!

python export_onnx.py --config-file configs/coco/sparseinst/sparse_inst_r50_giam_aug.yaml --video-input ~/Videos/a.flv --opts MODEL.WEIGHTS weights/sparse_inst_r50_giam_aug_2b7d68.pth INPUT.MIN_SIZE_TEST 512

If you are on a CPU device, please use:

python export_onnx.py --config-file configs/coco/sparseinst/sparse_inst_r50_giam_aug.yaml --input images/COCO_val2014_000000002153.jpg --verbose --opts MODEL.WEIGHTS weights/sparse_inst_r50_giam_aug_2b7d68.pth MODEL.DEVICE 'cpu'

This generates weights/sparse_inst_r50_giam_aug_2b7d68_sim.onnx, which can be run with ONNX Runtime (ORT) without any unsupported ops.
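When running the exported model with ORT outside detectron2, input images presumably need to be resized the way the export command configured them (INPUT.MIN_SIZE_TEST 512). A sketch of detectron2-style shortest-edge resizing, assuming detectron2's default test-time long-side cap of 1333 (INPUT.MAX_SIZE_TEST); check the exported graph's input shape first, since the ONNX model may expect a fixed size instead. `shortest_edge_resize` is a hypothetical helper:

```python
from typing import Tuple


def shortest_edge_resize(h: int, w: int, short_edge: int = 512, max_size: int = 1333) -> Tuple[int, int]:
    """Return (new_h, new_w): scale the short side to `short_edge`, capping the long side at `max_size`."""
    scale = short_edge / min(h, w)
    if h < w:
        new_h, new_w = short_edge, scale * w
    else:
        new_h, new_w = scale * h, short_edge
    # If the long side would exceed max_size, shrink both sides by the same factor.
    if max(new_h, new_w) > max_size:
        scale = max_size / max(new_h, new_w)
        new_h, new_w = new_h * scale, new_w * scale
    # Round to the nearest integer pixel size.
    return int(new_h + 0.5), int(new_w + 0.5)
```

For a 480×640 frame this gives 512×683, preserving the aspect ratio; very wide images are capped by the long side instead.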

Here is a dedicated performance comparison with other packages.

tbd.

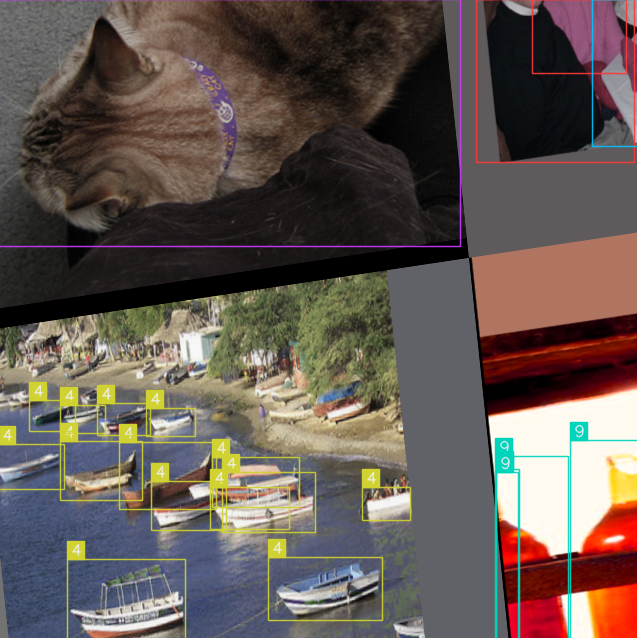

configs/wearmask to train this dataset.

[Images: Image | Detections]

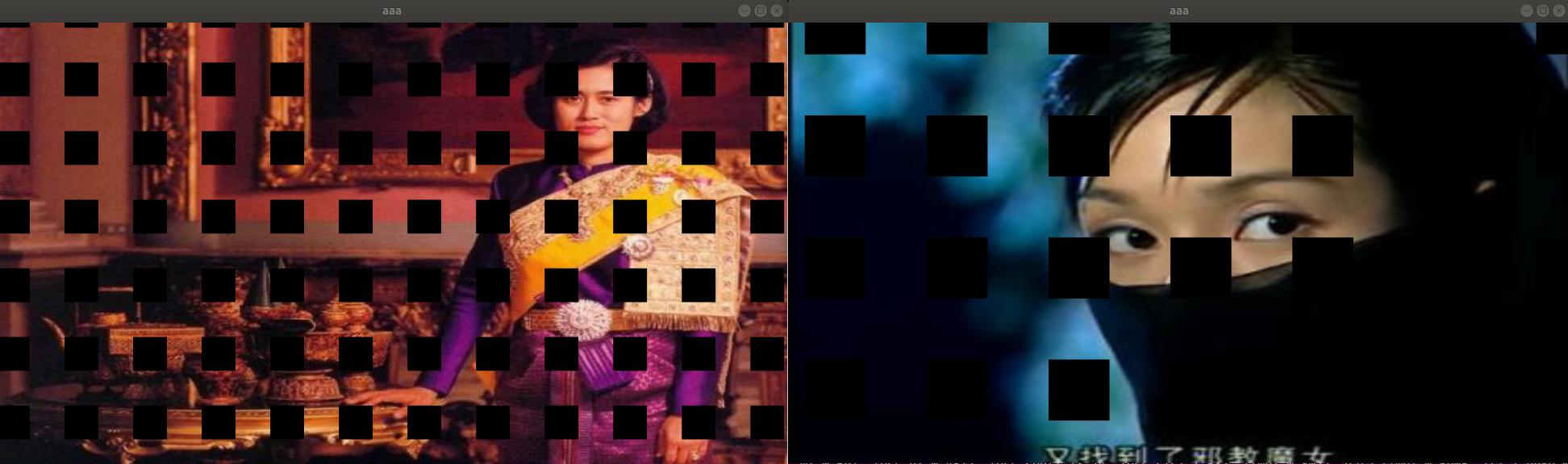

[Images: GridMask | Mosaic]
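GridMask augmentation drops a regular grid of square patches from the training image. A simplified pure-Python sketch of the mask, where `d` is the grid period and `ratio` is the fraction of each unit's edge that is kept; these parameter names are ours, and the randomization typically applied in practice (random `d`, random offsets, mask rotation) is omitted:

```python
from typing import List


def grid_mask(height: int, width: int, d: int, ratio: float,
              offset_x: int = 0, offset_y: int = 0) -> List[List[int]]:
    """Simplified GridMask: 1 = keep pixel, 0 = drop pixel.

    The image is tiled into d x d units; in each unit a square with side
    round((1 - ratio) * d) is dropped, so about 1 - (1 - ratio)**2 of pixels are kept.
    """
    drop = round((1 - ratio) * d)
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # A pixel is dropped only if it falls in the dropped span along BOTH axes.
            in_drop = (x + offset_x) % d < drop and (y + offset_y) % d < drop
            row.append(0 if in_drop else 1)
        mask.append(row)
    return mask
```

With `d=4` and `ratio=0.5`, every 4×4 unit loses a 2×2 square, so 75% of the pixels are kept; the mask is then multiplied element-wise with the image.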

Code is released under the GPL license. Please send a pull request to this source repo before making your changes public or using them commercially. All rights reserved by Lucas Jin.